Voice AI Just Had a Big Week

PLUS: Privacy and safety changes at Anthropic and OpenAI worth knowing.

It's Friday again!

I've been using voice dictation more and more lately.

Turns out we can speak much faster than we can type.

I measured it and I'm ~2x faster at talking (138 words per min vs 65 when typing).

My tool of choice has been Wispr Flow, but I've noticed speaking to ChatGPT has also gotten better.

This isn't the sad experience you've had with Siri or Alexa. AI has made voice capture more accurate and intuitive.

For me, talking has become an easier way to give AI feedback, casually explore an idea, or braindump thoughts into notes.

Based on this week's news, it seems like others are shifting towards voice too.

1/ AI Settings Updates to Know About

What happened: Anthropic and OpenAI made updates to their privacy and safety measures which could impact you.

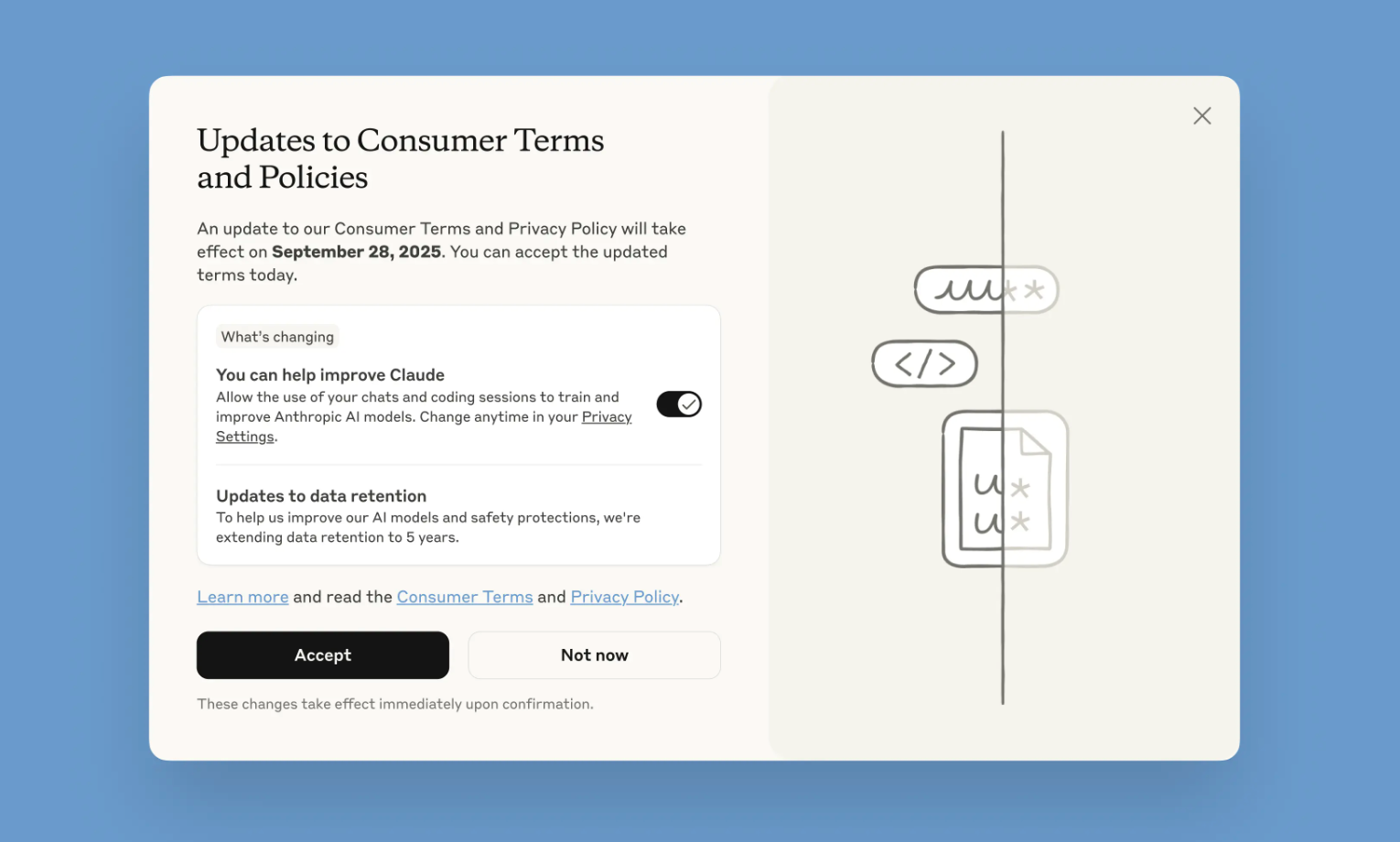

Anthropic Will Train Claude on User Convos

Anthropic will now train Claude models on your conversations, unless you opt out in your privacy settings by September 28. If you allow data sharing, Claude will retain your information for 5 years.

This applies to all consumer accounts, free and paid. Business and enterprise tiers are exempt.

The timing isn't a coincidence. Anthropic closed a $13B funding round this week, tripling its valuation to $183B. They'll need massive datasets to compete with OpenAI and Google.

OpenAI Adds Teen Safety Controls to ChatGPT

OpenAI is rolling out parental controls for teen users and will route sensitive conversations to more careful reasoning models.

Parents will be able to create linked accounts for kids 13+, set how ChatGPT responds to their child with behavior rules, manage memory and chat history settings, and get notifications if their teen is in distress.

Beyond controls for teens, ChatGPT will route all conversations it flags as potentially distressing to reasoning models that are designed to think strategically before responding.

2/ Spotlight on AI Voice Models

What happened: Voice AI took major leaps this week. Microsoft launched their first in-house AI models, OpenAI moved their voice API out of beta with image support, and Google released a conversation partner that supports 70+ languages.

Microsoft's New Voice Model & Independence Play

Microsoft launched MAI-Voice-1 (a speech model) and MAI-1-preview (a text model), their first models built entirely in-house.

MAI-Voice-1 generates natural speech in less than one second and is already integrated into Copilot Daily and Podcasts. MAI-1-preview is in testing on LM Arena (a model testing and ranking site).

The story here isn't just the speech model. It's Microsoft's independence from OpenAI. Microsoft spent years relying on OpenAI's tech in a messy partnership. Now they're building their own AI stack.

OpenAI's Voice Upgrade

OpenAI moved its speech-to-speech Realtime API out of beta with some big upgrades.

The new gpt-realtime model detects nonverbal cues like pauses and tone changes, switches languages mid-conversation, and handles image input during voice conversations.

Accuracy on audio reasoning benchmarks jumped from 65.6% to 82.8%.

Google's Translation Partner

Google released a bilingual AI conversation partner in beta, supporting over 70 languages with real-time translation.

Cohere's Enterprise Translation Model

Canadian AI org Cohere also launched a translation model: Command A Translate, geared towards enterprise use.

They've claimed top scores on translation benchmarks with enterprise-grade security and GDPR compliance for business comms.

3/ Other Signals Worth Your Attention

- Amazon got an AI shopping upgrade. Amazon's AI Lens now includes real-time image search and live shopping features. Point your camera at something and Rufus helps you find and buy it immediately.

- An update on that Apple-Google AI deal mentioned last week. Apple reportedly struck a deal to use Google's Gemini model to power web search in an AI-upgraded Siri. They're targeting a spring 2026 release.