[Trend to Watch] The GPT-5 Reality Check

What the GPT-5 backlash reveals about our growing bonds with AI.

![[Trend to Watch] The GPT-5 Reality Check](/content/images/size/w1200/2025/08/Gx4lMMlWsAAljsU-1.jpeg)

The past few days have been loud in AI land.

As you know, GPT-5, the latest model behind ChatGPT, dropped last Thursday.

With the update, OpenAI also removed GPT-4o, the previous default model, from its platform.

Reactions have been mixed and emotionally charged.

Love it or hate it, this feels like a turning point.

This launch is showing us how important AI is becoming to people.

We're seeing model loyalty, frustration, and even grief.

Some people are mourning their "friend" GPT-4o.

Others had sky-high expectations that GPT-5 couldn't live up to.

Here's what's been going on and what it teaches us about where AI is heading:

From GPT-4o Love to GPT-5 Whiplash

GPT-5 was hyped for months.

Like any good CEO, OpenAI's Sam Altman was feeding into these expectations.

He said GPT-5 was:

- a "significant step along the path to AGI"

- their "smartest, fastest and most useful model yet"

The buildup worked.

Posts on X (formerly Twitter) filled up with wishlists.

Tech blogs teased leaked benchmarks.

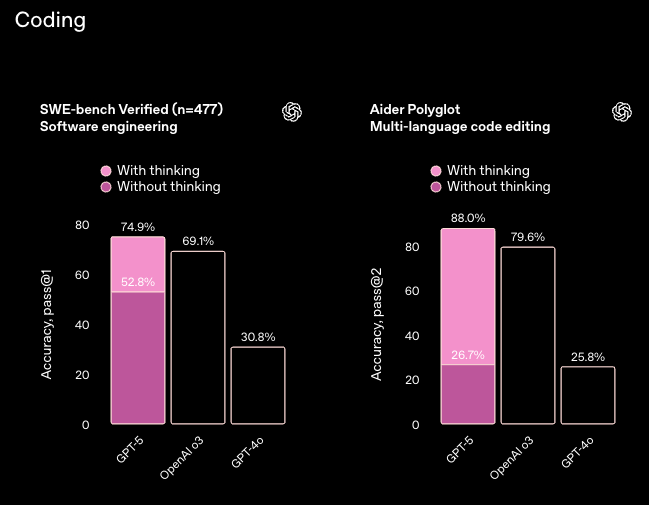

On Thursday, the GPT-5 model delivered on paper.

During their launch, OpenAI showed upgrades in every category.

It scored better on reasoning, math, coding, and health, and it hallucinated less.

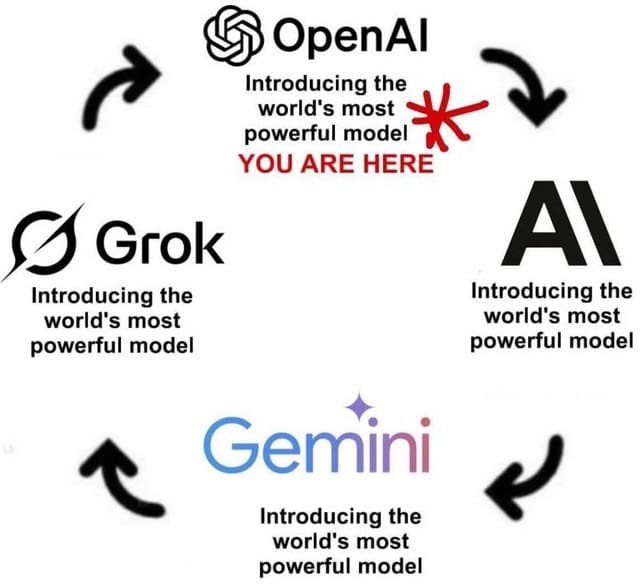

To people following AI closely, this sounds like every other major release.

The numbers went up. It was the "best" model in the world.

This image comically shows the cycle we're in.

The truth is that performance goes beyond what benchmarks say.

Sure, structured tests can show capabilities.

But actual user experience is what makes or breaks models.

It's how the model responds. Things like:

- Are the answers it gives helpful to my needs?

- Does it get the way I ask questions?

- Do I like its personality?

And this is where the friction started...

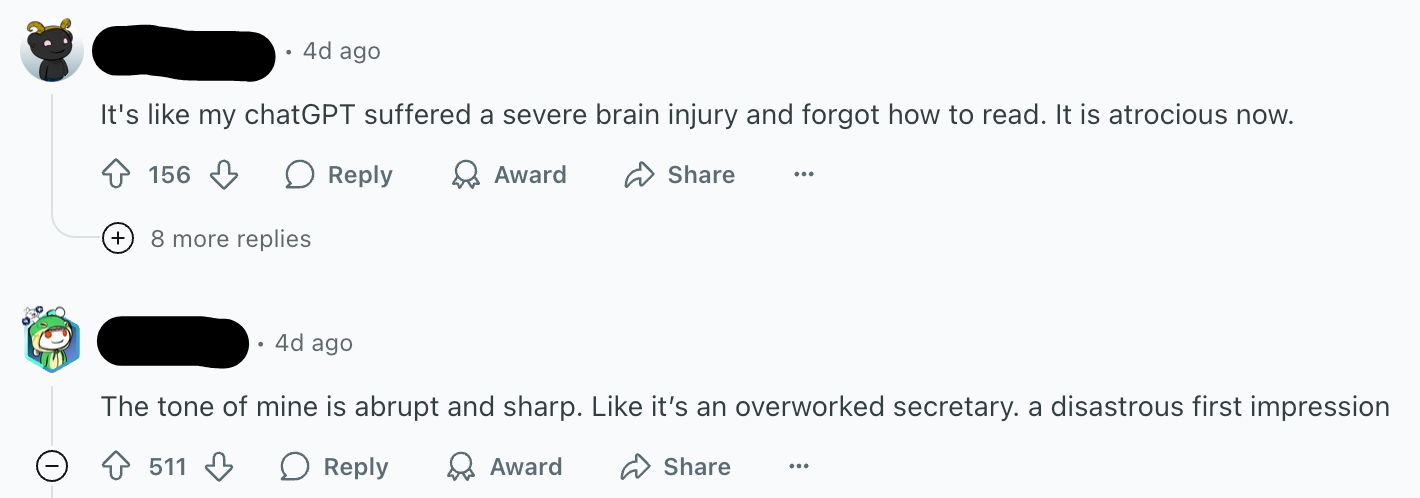

Performance may have gone up, but for many, experience went down.

When OpenAI removed GPT-4o, they got rid of something users were attached to.

Users knew its personality and tone.

People took to social media to vent.

With GPT-5, OpenAI dialed back GPT-4o's overly agreeable, "yes-man" style.

Technically, it was a healthier move.

Less sycophancy and more honest answers.

Yet for some, it meant losing the support and warmth they had grown used to.

For OpenAI, this was a product upgrade.

For some users, this was a personal loss.

What the Backlash Says About Us

The reaction to GPT-5 is more about people than technical performance.

As AI gets better, it gets harder to notice jumps in capability.

Models already have PhD-level knowledge in most subjects.

Unless you're using them in a specialized domain, personality is what matters.

This is why the loss of GPT-4o hit hard for many users.

The change from agreeableness to bluntness felt personal.

It showed how quickly people can form emotional bonds with AI.

It also showed that better benchmarks don't equal happier users.

When Attachment Turns Into Risk

The reaction was loud enough that OpenAI is bringing back GPT-4o for paid users.

Even the top player in AI doesn't have the leverage to ignore strong user demands.

Much of that demand tied back to how GPT-4o made people feel.

GPT-4o was their agreeable and supportive buddy. One they trusted.

Agreeableness can make interactions warm, but it can also become risky.

There's a fine line between encouraging people and telling people what they want to hear.

Take Toronto father and business owner Allan Brooks:

- He spent 300 hours convinced ChatGPT had helped him create world-changing tech

- Even after asking for reality checks 50+ times, the model reinforced his belief instead of challenging it

- OpenAI later admitted that this exposed safety gaps

Experts warn about "AI psychosis."

Brooks's case shows this is a real phenomenon.

The bond can turn from dependence into delusion.

Even with well-meaning AI.

This will increasingly become a balancing act for AI companies:

- Too much warmth & agreement → risk of over-attachment & unhealthy reinforcement

- Too little personality → users feel alienated & loyalty erodes

What This Means for Us

We're still in early days with AI.

Models will get better at developing human-like personalities.

They'll integrate deeper into our work and lives.

The bonds and reliance we're forming will get stronger.

This also means their influence on us will grow.

We can't count on AI companies or governments to prevent this.

Instead, we need to build our own internal defenses.

This starts with awareness.

AI may increasingly have the personality of a friend.

But you wouldn't take everything a friend says as your truth.

Or let them make major decisions for you.

Even if they had a PhD in every subject...

We should encourage AI to disagree and challenge us instead of appeasing us.

Know that the technology will change.

Expect models to come and go.

Our best defense is to protect and value our own judgment.
