The Fixed Mindset That's Slowing AI Adoption

The bottleneck isn’t always AI’s limitations. Sometimes it’s our refusal to let people explore outside their domain.

A marketer prototyping with AI shouldn't feel like a threat to developers. So why does it?

I published a piece recently about why non-technical employees should learn to vibe code.

The angle was simple:

Empowering individuals to create and grow skills. A new way of thinking about problem-solving that opens up once you understand what can be built and can begin prototyping yourself.

Not replacing developers. Complementing them.

The response from some devs was defensive. These are the mild ones:

"All great till your vibe coder wipes the production database or exposes client data."

"Imagine what happens when non-devs push code with live data."

Valid concerns. Organizations need processes. Evaluation matters. Expertise is still a major advantage.

But the visceral pushback revealed something deeper: the discomfort of seeing skills you’ve mastered become suddenly accessible to others.

This selective embrace of AI shows up everywhere.

When AI works for us, we love it. When it helps others do our thing, we dismiss it.

Here's the irony.

Survey data shows 90% of developers now use AI to code. They see the value when it amplifies their expertise.

But a marketer using AI to prototype? That's treated like a threat or a mess they're not ready to clean up.

A marketer prototyping with AI won't replace a developer. But they will:

- Ideate differently

- Communicate requirements more clearly

- Test concepts before pulling devs in

Just like a developer using AI to understand marketing won't replace a marketer, but will build better products.

Will non-experts create crap and make mistakes? Absolutely.

They’ll build clunky prototypes, write messy code, and try things that don’t belong near production.

But that’s the point: the learning happens in the attempt.

With the right systems, most of that work never reaches production.

It gets caught in review, refined by subject matter experts, or ideally scrapped before it causes problems.

What matters is that the process surfaces new ideas, reveals hidden talent, and accelerates collaboration.

Expertise remains valuable, but rigid boundaries are becoming a liability.

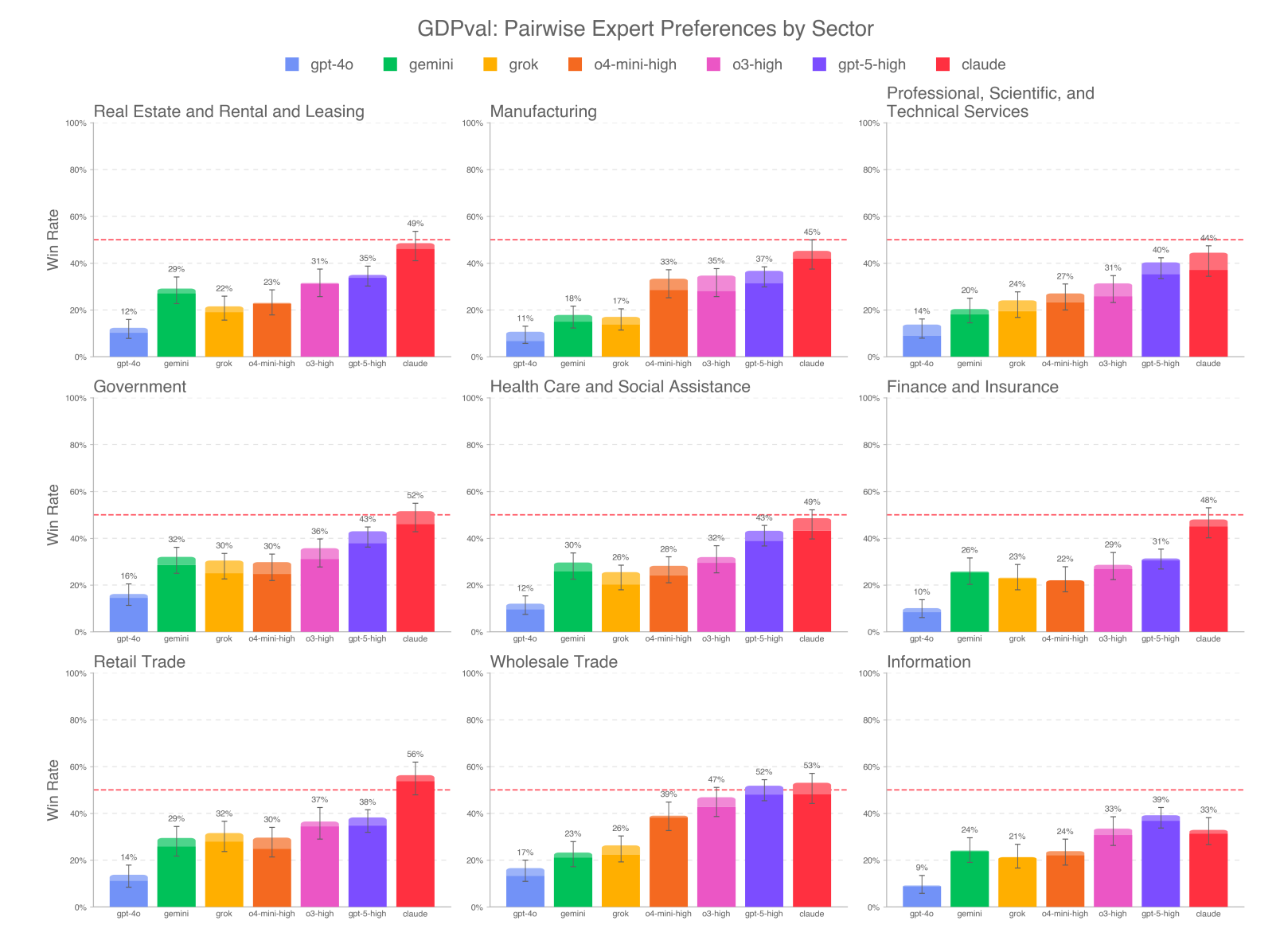

OpenAI's GDPval, a benchmark testing models across 44 real-world jobs, shows that top models are getting close to expert-level human performance across professional tasks.

When outputs fail, it's mostly because of poor instruction-following and formatting, not accuracy, reasoning, or execution details. And instruction-following and formatting are the areas where models are improving fastest.

This matters because the skills gap that once justified strict boundaries is closing.

When AI can produce expert-level work with the right guidance, the argument that "non-experts can't do this" starts to fall apart.

The question becomes: will we let people take advantage of it, or keep defending outdated roles?

Teams that get this will thrive.

Organizations that encourage employees to expand their understanding of adjacent areas will have advantages.

- Marketing teams that understand technical constraints

- Dev teams that grasp business priorities

- Finance teams that can prototype internal tools

If you're a developer, imagine teammates who articulate requirements in visual prototypes instead of vague tickets.

If you're non-technical, imagine solving problems without waiting for another team to have bandwidth.

This doesn't mean chaos. It doesn't mean eliminating specialization or letting anyone push code to production.

It means accepting that the boundaries defining "who can do what" are changing.

And fighting that change with a fixed mindset only ensures you fall behind.

The pushback against vibe coding isn't entirely about code quality or risk management.

After all, dev teams rightfully wouldn't give vibe coders production access or that level of responsibility in the first place.

Instead, it's about the natural discomfort of watching skills you spent years building become more accessible and the fear that others will misuse their new abilities.

That discomfort is real, but it's also limiting.

The limit isn't the tech. It's us.

Instead of fixating on why a non-expert can't use AI, what if we focused on empowering them to use it well?

- Helping them understand limitations and watch-outs

- Guiding them on approaches that improve our processes instead of creating more work

- Developing the new workflows that will inevitably come

Growth vs. fixed. Collaboration vs. territory. Expanding capability vs. defending boundaries.

Which side are you on?