Stop Trying to Keep Up With Every AI Launch

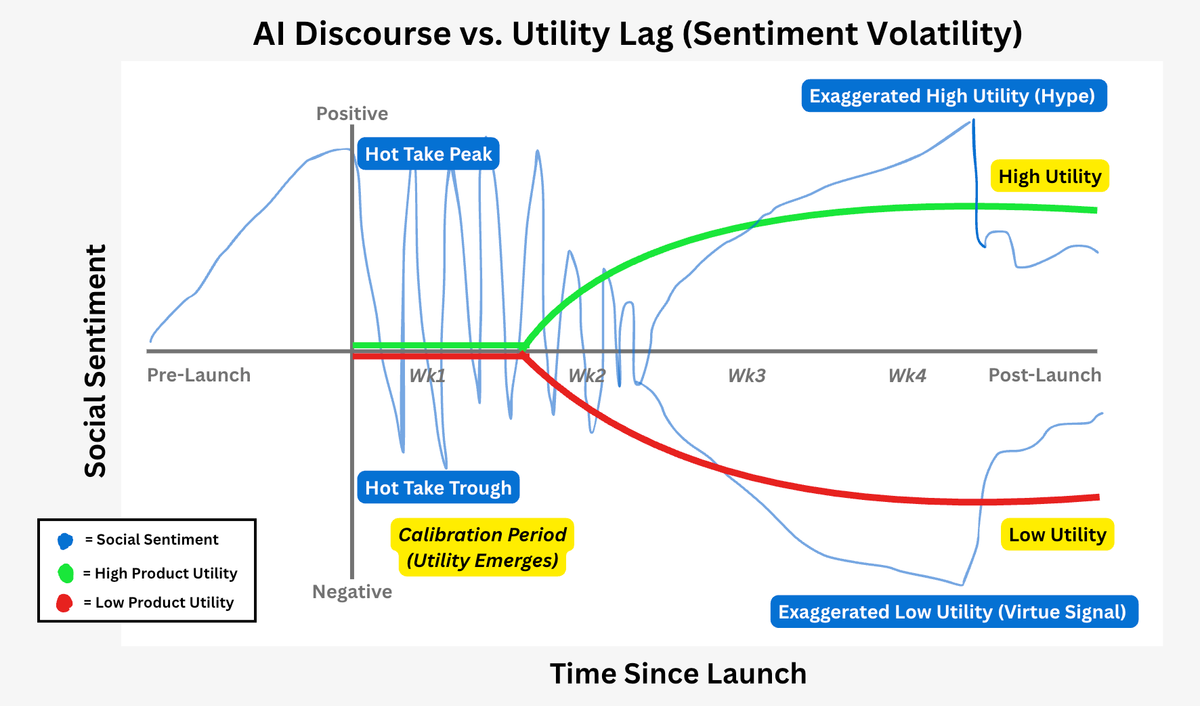

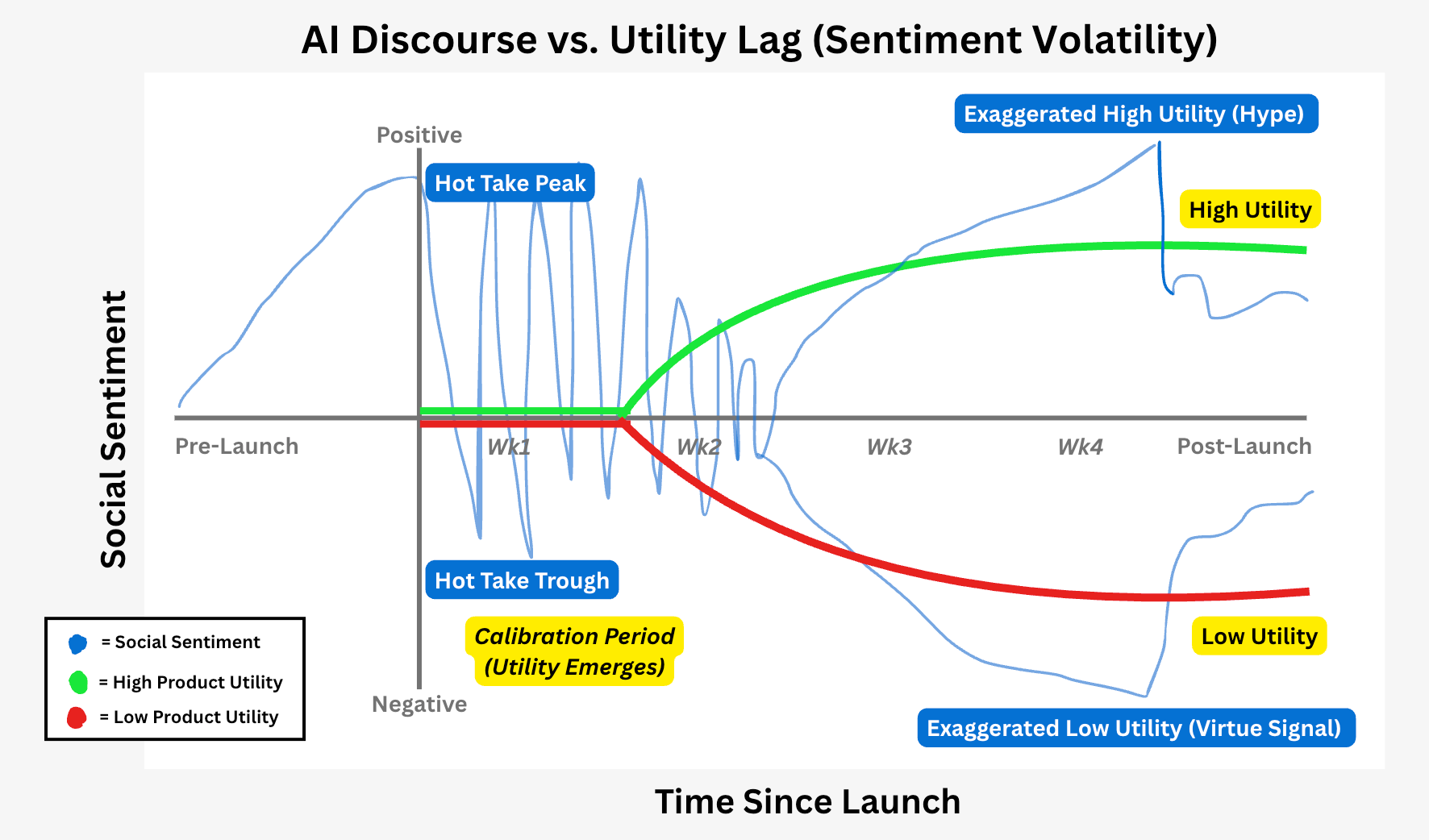

Hot takes peak in week 1. Validation shows up in week 3. Here's how to use that lag as your filter.

Each time a new AI product drops, our feeds flood with takes.

Many of them are convincing.

It's easy to feel their pull.

What if this is the tool that matters?

What if everyone else figures it out before us?

So we context-switch.

Open up the latest tool and test it for a few minutes.

Feel accomplished.

Then do the same thing for the next big release.

We're testing everything to feel productive.

But we're draining our energy on surface-level use.

Social discourse always runs ahead of proven utility.

Hot takes peak immediately.

Value only shows up weeks later.

Let other people test for you, then wait for the signal.

This Pattern Keeps Repeating Itself

Week 1:

Hot takes from people who have tested the tool for an hour (if at all).

They're loud and volatile.

Some positive, some negative.

Designed to capture social media engagement.

Weeks 2–4:

The noise moves on to the next thing.

Builders who have gone beyond surface-level use validate (or invalidate) the tool.

The signal emerges.

If builder sentiment stays positive and gets louder in week 3?

That's your cue.

Recent Signals

Claude Skills launched mid-October.

Claude Opus 4.5 launched late November.

The noise quieted, but both re-emerged as trending topics on social media in late December.

This time they were validated by people using them for real applications.

The lag is your filter.

Use the Lag

Ignore week 1 hot takes.

Wait until week 3.

If builders are still positive, pay attention.

You won't fall behind by waiting...

You'll save your energy for going deep with the tools that prove themselves.

Let other people burn out chasing launches.

Wait for validation.