[#7] Why Your Perfect AI Prompts Are Backfiring

PLUS: ChatGPT's 2.5B daily prompts, Amazon's AI promotion mandate, and Trump's growth-first policy shift.

Riley

![[#7] Why Your Perfect AI Prompts Are Backfiring](/content/images/size/w1200/2025/07/promptstuffer-strategist-chasingnext.png)

What's inside this week

- 4 high-signal AI announcements, from new US AI policy to Amazon's AI mandate

- Learn why your detailed prompts are backfiring. Hint: instruction overload

- Trend to Watch: people are starting to talk like AI

- The low-risk AI integration strategy to avoid legal headaches

Just the Signals

1. Trump's AI Action Plan Prioritizes Growth over Protections

Trump's AI Action Plan shifts sharply from Biden's cautious approach, prioritizing AI infrastructure buildout and deregulation over safety guardrails. The plan threatens to limit federal funding to states with strict AI regulations and aims to expedite data center construction, even on federal lands. It also mandates that government AI contracts go only to providers ensuring "objective" and "unbiased" systems.

Source: TechCrunch

Why it matters

This signals a shift in federal AI policy from safety-first to growth-first. For business leaders, this means faster AI infrastructure deployment, but potential regulatory uncertainty as federal and state policies conflict. Companies building AI systems may face new "objectivity" requirements for government contracts while benefiting from reduced environmental and safety restrictions.

What to do now

If your company has government contracts, review current AI systems for potential "bias" issues that could affect future procurement eligibility.

For infrastructure planning, consider how expedited data center permitting might impact your AI scaling strategies.

Start tracking state-level AI regulations in your operating jurisdictions to prepare for potential federal-state conflicts.

2. Google's AI Features Drive Record User Engagement

Google reported that AI is "positively impacting every part of the business" with AI Overviews reaching 2 billion monthly users and AI Mode surpassing 100 million monthly active users. The company increased its 2025 capital expenditure plans to $85 billion (up $10 billion) to accelerate data center construction and AI infrastructure investment.

Source: The Verge

Why it matters

Google's user adoption numbers indicate that AI features are becoming a core part of its offerings. The $10 billion infrastructure increase signals that AI demand is exceeding even Google's aggressive projections.

What to do now

Try Google's AI Mode if you haven't yet. It's different from ChatGPT and AI Overviews, and worth using as an up-to-date research tool.

Budget for AI infrastructure costs. Google's $10 billion spending increase signals that AI compute costs aren't coming down soon. If you're planning AI implementations, factor in rising infrastructure expenses and potential vendor price increases.

3. ChatGPT's Massive Scale: 2.5 Billion Daily Prompts

OpenAI revealed ChatGPT processes 2.5 billion prompts daily (up from 1 billion in December 2024). With 330 million daily queries from U.S. users alone and 500 million weekly active users globally, ChatGPT now ranks as the fifth-most-visited website worldwide.

Source: Mashable

Why it matters

This usage explosion shows daily prompt volume growing 2.5x in about seven months, well beyond a doubling. It signals that AI tools are becoming standard business infrastructure and marks a fundamental shift in how people access information.

What to do now

Explore beyond ChatGPT. Even with its 62.5% market share, it's worth testing alternatives like Gemini, Claude, or specialized AI tools that competitors might be using. If you're only using one AI tool, you're missing opportunities.

4. Amazon's AI Mandate for Promotions Signals Corporate Shift

Amazon's Ring and smart home divisions now require employees to demonstrate AI usage to qualify for promotions, with managers needing to show how they "accomplish more with less" using AI. Simultaneously, Amazon's AWS division is cutting hundreds of jobs while reallocating $100 billion toward AI infrastructure investment this year.

Source: Business Insider

Why it matters

This represents a fundamental shift in HR practices where AI skills become mandatory for career advancement. Amazon's simultaneous layoffs and AI investment show how companies are restructuring human capital to fund AI transformation. Other major employers are likely to follow this model of AI-driven performance evaluation.

What to do now

Start documenting your AI usage now - track specific tools, time saved, and measurable outcomes for your next performance review.

If you're in management, prepare to demonstrate how AI tools help your team deliver more value with existing headcount. Amazon's model of requiring AI competency for advancement is likely to spread across other major employers.

Your Advantage This Week

Why Your Detailed Prompts Are Backfiring

People think the secret to better AI results is writing longer, more detailed prompts. They stuff every requirement, context clue, and formatting preference into a single request, expecting AI to juggle it all perfectly.

Here's what actually happens: AI hits a cognitive ceiling around 150 simultaneous instructions, and your detailed prompts start working against you.

The Study:

Researchers tested leading AI models (OpenAI's o3, Gemini 2.5 Pro, Claude) with increasing instruction complexity: 10, 50, 150, 300, and 500 simultaneous instructions.

Key Finding:

Even frontier AI models hit a performance wall around 150 instructions.

Why Your Detailed Prompts Backfire:

A prompt like "Analyze this market data, identify 3 key trends, suggest positioning strategy, write executive summary, keep it under 2 pages, match our brand voice, avoid jargon, include competitor analysis" is actually 8+ competing priorities AI has to juggle.
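To make the count concrete, here is a minimal Python sketch that splits the example prompt above into its separate instructions. The helper name `split_instructions` and the comma-splitting rule are assumptions for illustration only; this is a rough heuristic, not how the study measured instruction counts.

```python
import re

# The example prompt from the paragraph above, verbatim.
PROMPT = (
    "Analyze this market data, identify 3 key trends, suggest positioning "
    "strategy, write executive summary, keep it under 2 pages, match our "
    "brand voice, avoid jargon, include competitor analysis"
)


def split_instructions(prompt: str) -> list[str]:
    """Rough heuristic (an assumption): each comma- or semicolon-separated
    clause counts as one instruction."""
    parts = re.split(r"[,;]\s*", prompt)
    return [part.strip() for part in parts if part.strip()]


instructions = split_instructions(PROMPT)
for number, instruction in enumerate(instructions, start=1):
    print(f"{number}. {instruction}")
print(f"Total instructions: {len(instructions)}")
```

Real prompts rarely split this cleanly, but even a crude count like this can show when a short request is carrying more requirements than it appears to.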

What Happens During AI Overload:

- Primacy bias: Earlier instructions get priority over later ones

- Omission errors: AI skips requirements rather than misinterpreting them

- Graceful degradation: Outputs appear polished but miss your key specifications

Critical Insight:

Prompt length ≠ prompt complexity

A 500-word prompt with one clear objective outperforms a 50-word prompt with 10 different requirements.

Practical Framework:

- 1-10 instructions: All models perform well

- 10-30 instructions: Most models handle effectively

- 50-100+ instructions: Only frontier models maintain accuracy

- 150+ instructions: Even top models miss critical requirements

Strategic Recommendations:

- Prioritize ruthlessly: Put your most critical requirements first

- Leverage reasoning models: OpenAI's o3 and Gemini 2.5 Pro perform better on complex tasks

- Chain focused prompts: Use multiple prompts with fewer instructions each

- Remember: Large context windows ≠ higher instruction-following capacity
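The "prioritize" and "chain focused prompts" recommendations can be sketched in a few lines of Python. Everything named here is hypothetical: `chunk_instructions`, `run_chain`, and the `fake_model` stand-in are illustrative, and in practice you would replace `fake_model` with a call to whichever AI tool you use. The limit of three instructions per prompt is an arbitrary assumption, not a number from the study.

```python
from typing import Callable, List


def chunk_instructions(instructions: List[str], max_per_prompt: int = 3) -> List[List[str]]:
    """Split an ordered instruction list (most critical first) into small groups."""
    if max_per_prompt < 1:
        raise ValueError("max_per_prompt must be at least 1")
    return [
        instructions[i:i + max_per_prompt]
        for i in range(0, len(instructions), max_per_prompt)
    ]


def run_chain(chunks: List[List[str]], call_model: Callable[[str], str], source_text: str) -> str:
    """Send one focused prompt per chunk, feeding each output into the next step."""
    current = source_text
    for chunk in chunks:
        bullet_list = "\n".join(f"- {step}" for step in chunk)
        prompt = f"Instructions:\n{bullet_list}\n\nInput:\n{current}"
        current = call_model(prompt)
    return current


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: reports how many
    instructions the prompt contained."""
    count = sum(1 for line in prompt.splitlines() if line.startswith("- "))
    return f"[draft after {count} instructions]"


# Most critical requirements listed first, as recommended above.
steps = [
    "Analyze this market data",
    "Identify 3 key trends",
    "Include competitor analysis",
    "Suggest positioning strategy",
    "Write executive summary",
    "Keep it under 2 pages",
    "Match our brand voice",
    "Avoid jargon",
]

chunks = chunk_instructions(steps, max_per_prompt=3)
result = run_chain(chunks, fake_model, "raw market data")
print([len(chunk) for chunk in chunks])
print(result)
```

The design choice is that each prompt carries only a few instructions and the previous step's output becomes the next step's input, so no single request has to juggle all eight requirements at once.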

Trend to Watch

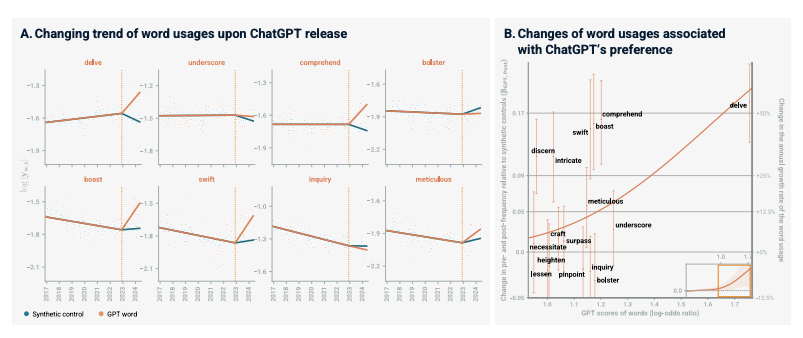

Humans Are Starting to Talk More Like ChatGPT

New research from Berkeley and Harvard reveals that AI language models are influencing human writing patterns. Analyzing academic papers and millions of posts on platforms like Reddit, researchers found that humans increasingly adopt AI-style phrases, structures, and vocabulary, even when not directly using AI tools.

The study shows this "linguistic convergence" is happening faster than expected, with certain phrases and sentence patterns becoming more common in human writing after gaining popularity through AI interactions.

Why it matters

This linguistic shift shows AI's cultural impact beyond just productivity gains. As AI language patterns become normalized in human communication, it could affect everything from business writing standards to how we evaluate "authentic" human content. For professionals, understanding these patterns helps you consciously choose when to embrace or avoid AI-influenced communication styles.

What to do now

Pay attention to your own writing patterns and those of your team. Consider developing style guides that intentionally preserve human voice characteristics that matter to your brand.

When AI assistance produces overly formal or generic language, edit deliberately to keep your authentic voice.

One More Thing

The Low-Risk AI Implementation Strategy

Most corporate AI discussions get stuck on two extremes: either avoiding AI completely due to data privacy concerns, or jumping into flashy implementations that trigger legal and compliance nightmares.

But there's a third path that smart organizations should be taking: finding the sweet spot where AI delivers gains without touching sensitive data or creating public-facing risks.

Target processes that are:

- Repetitive but not customer-facing

- Data-heavy but not PII-sensitive

- Time-consuming, or impractical to do manually

Think: automating budget roll-ups between teams, streamlining briefing processes that currently take 3 days of email tennis, building performance alerts that surface issues before they blow up, or creating searchable institutional knowledge that doesn't walk out the door with employees.

These aren't glamorous AI applications, but they're the ones that will save hours per week while avoiding the barriers that kill corporate AI projects.

Why it matters

Many organizations are paralyzed by AI's risks instead of capitalizing on its opportunities. Companies can gain leverage now by identifying internal workflow improvements that deliver measurable value without crossing compliance red lines. This approach builds AI competency and confidence while avoiding the legal, privacy, and brand risks that come with customer-facing implementations.

What to do now

Map your team's time drains. Look for recurring work like budget compilation, status updates, competitive monitoring, and weekly summaries.

Identify non-sensitive data flows. Find processes that move information between systems without involving PII, customer data, or highly confidential business intelligence.
