5 Things You Should Know in AI This Week — November 21, 2025

Simplifying the noise. Here are five signals that matter for non-technical workers.

What Happened This Week

1. Google Releases Gemini 3 - The New Top Model

Google released Gemini 3 this week.

Its new foundation model brings major upgrades in reasoning and multimodal understanding (text, images, audio, and video).

Gemini 3 comes in two variants:

- Gemini 3 Pro (the flagship, available in preview now)

- Gemini 3 Deep Think (enhanced reasoning mode for complex tasks)

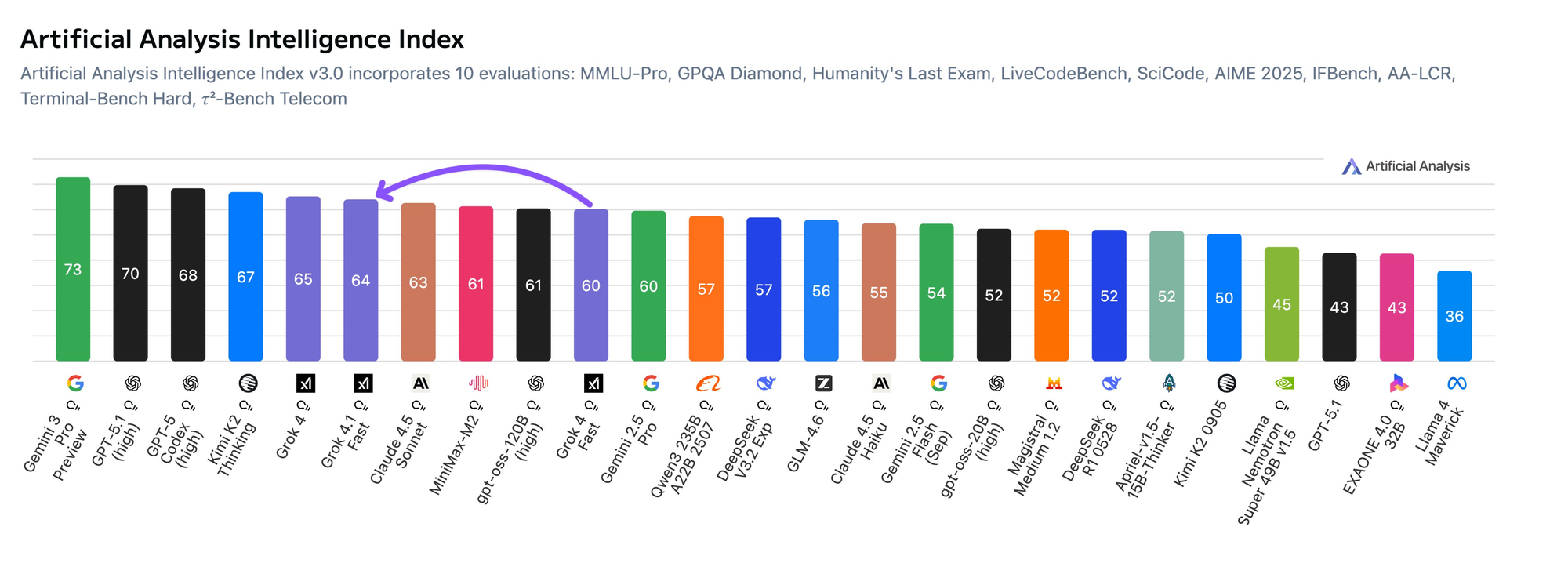

Benchmarks show Gemini 3 outperforming GPT-5 and Claude Sonnet 4.5 on reasoning tasks (ARC-AGI and GPQA).

Deep Think mode is the standout feature.

It takes extra time to work through multi-step problems for extended planning, coding, or analysis.

This is Google's answer to OpenAI's o1 reasoning models.

Here's what people are saying Gemini 3 is good and not so good at:

| What Gemini 3 is Good At | What Gemini 3 is Not So Good At |
|---|---|
| Solving hard, multi-step problems (reasoning through messy questions instead of just guessing). | Can feel slow or heavy when you just want a quick, simple answer or short chat reply. |
| Looking at lots of information at once (long docs, long chats, big projects) and keeping track of context. | Sometimes sounds very confident even when it’s slightly wrong, so important details still need checking. |
| Working across text, images, screenshots, and video in one go (great for decks, UIs, PDFs, whiteboard photos). | Not always the very best at following super-strict instructions or formats compared with some rivals. |
| Helping with work tasks: summaries, analysis, comparisons, planning projects, simple data or code help. | Creative/emotional writing (stories, marketing copy with a strong “voice”) is good but not top of the field. |
| Acting as a practical assistant that can reason, plan, and drive tools/agents for multi-step workflows. | Some advanced features/limits (quotas, special modes, dev tools) are confusing or not visible to everyone yet. |

Google has already built Gemini 3 into its AI-powered Search results.

For Workspace users, Gemini 3 is rolling out across Docs, Sheets, Gmail, and Meet.

In other huge news, Google also released its newest viral image model: Nano Banana Pro (built on Gemini 3).

The model is another step forward for AI image generation: it handles up to 14 visual references, produces 4K-resolution images, and renders text more accurately.

The signal?

Google was expected to have the top model by year-end.

They delivered.

This release isn't just aimed at chipping away at OpenAI's consumer market.

With Gemini 3, Google is making a serious enterprise play too.

Earlier this year, Google held 20% of enterprise customers, behind Claude (32%) and ChatGPT (25%).

Gemini has already been making up ground, and doubling down on logic and reasoning should help close the gap further.

But the launch shows Google is keeping consumers in focus too.

Beyond the Nano Banana Pro drop, Gemini 3 demos included practical applications like vacation planning and gift shopping.

If you've never tested Gemini or Nano Banana, now's the time.

Try Gemini 3 for multi-step tasks: analyzing data, building project plans, critiquing strategy documents, or structuring complex emails.

Notice how it handles reasoning differently from ChatGPT or Claude.

While the differences in model capabilities are getting harder to notice, the models still have distinct personalities and approaches.

2. xAI Releases Grok 4.1 with Personality and Reasoning Upgrades

xAI released Grok 4.1 on November 17.

This isn't a foundation model launch like Gemini 3.

It's an incremental upgrade to Grok 4, focused on better reasoning, multimodal understanding, emotional intelligence, and fewer hallucinations than earlier Grok versions.

It also jumped ahead of Claude Sonnet 4.5 in Artificial Analysis's rankings.

Grok 4.1 includes two variants:

- Grok 4.1 (base model)

- Grok 4.1 Fast (optimized for tool-calling and agentic workflows)

The model now handles a 2-million-token context window, meaning it can process extremely long docs and conversations.

There's also an Agent Tools API for orchestrating external tools like search, web scraping, and code execution.

The personality shift:

Grok 4.1's tone is seen as more collaborative and emotive vs the edgier, playful style Grok was known for.

It's still expressive, but xAI is positioning it for more serious use cases where Grok hasn't been considered before.

Think finance, customer service bots, or government work.

This adjustment is important because personality is a competitive differentiator.

Here's where Grok 4.1 stands in performance:

| What Grok 4.1 is Good At | What Grok 4.1 is Not So Good At |
|---|---|
| Quick, conversational answers with a natural, empathetic style. | Can miss details in complex or highly technical programming tasks. |
| Creative writing and catchy wording (stories, headlines, hooks). | Sometimes less reliable at formal/academic tone or precision formatting. |
| Understanding and responding to emotion and intent in conversation. | Still hallucinates; important info must be double-checked. |
| Faster-than-average response time, even under load. | Image uploads / large files can be inconsistent. |
| Good at long, coherent chats: keeps personality & context well. | Tool use + productivity workflows are less polished than some rivals. |
| Real-time search integration for current events. | Can embellish or generalize when facts aren’t clear, especially in long summaries. |

The signal?

As we saw last week with Kimi K2, the model race isn't just OpenAI vs Anthropic vs Google anymore.

xAI is pushing hard on long context, tool integration, and agents.

And while most corporate teams don't use xAI products, there's no doubt Grok remains a big player in the broader AI landscape.

For now, this is a "watch" story.

Knowing where Grok stands helps you understand the competitive dynamics and opportunities in play as the landscape evolves.

3. Bezos Returns as CEO for New AI Startup Focused on Manufacturing

Jeff Bezos is back in a CEO role.

He's co-heading a new AI startup called Project Prometheus, focused on applying AI to engineering and manufacturing in aerospace, automotive, and other physical industries.

The company has reportedly raised around $6.2 billion in funding and hired 100 employees from top AI labs.

This is Bezos' first major operational role since stepping down as Amazon's CEO.

The signal?

We've seen plenty of talk about robots and manufacturing automation, but not much real-world implementation yet.

This move is a credibility signal that physical-world AI is the next phase.

It's Bezos, so it's worth paying attention to.

For now, this is a directional watch that shows where AI investment and capability are headed next.

4. Target Launches Shopping Inside ChatGPT + New Enterprise Ops

Users will be able to brainstorm ideas, browse Target products, and check out, all in a dedicated Target app inside ChatGPT.

This takes advantage of OpenAI's October Apps SDK announcement.

If you use ChatGPT, you'll be able to ask something like:

"Help me plan a family holiday movie night with Target."

ChatGPT will pull up the Target app and suggest movies, popcorn, cozy blankets, and more.

You can browse, adjust, and buy through your Target account with Drive Up, Order Pickup, or shipping.

Target and OpenAI also shared how Target is using ChatGPT to support its operations:

- Agent Assist and Store Companion help service center and store teams answer questions, price match, process returns, and resolve issues faster

- Shopping Assistant and Gift Finder use AI to personalize product recos based on interests, age, or occasion

- Guest Assist and JOY (trained on 3,000+ FAQs) help customers and vendor partners resolve questions instantly

The signal?

This is what an early, coordinated AI strategy looks like.

Target is running AI as a sales channel (shopping in ChatGPT) and AI as operational support (service teams, vendor relations, personalization) in parallel.

For other orgs, this is inspiration and a directional signal:

Partnerships will increasingly put AI at the center of the customer experience.

Beyond rethinking brand touchpoints, your company should consider now whether building its own app inside ChatGPT with OpenAI's Apps SDK makes sense.

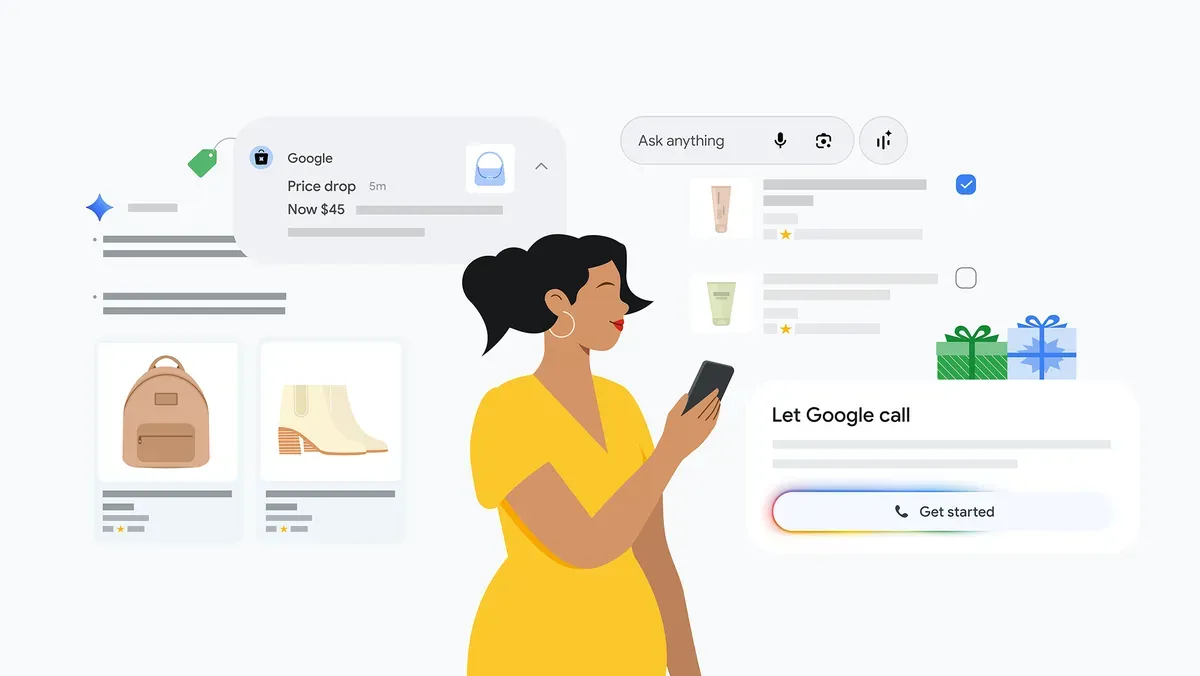

5. Google Upgrades AI Shopping for Holiday Season

Google rolled out major AI shopping updates ahead of the holidays.

What changed for shoppers:

Google's AI Mode and Search now surface curated product recos based on your query, budget, and preferences.

You ask: "find gifts for my office under $50."

It gives side-by-side options with reviews, pricing, features, and availability.

Results also show buy buttons and let you build your basket right in Search.

Plus you can interact directly with the results to ask questions and refine your options.

What changed for brands:

Structured product data will be super important for this new version of SEO.

To show up in AI results, brands need rich product feeds.

Things like detailed descriptions, accurate pricing, real-time availability, and robust reviews are critical.

Gemini uses this data to generate results, which means clean, complete product info can outperform older SEO tactics in driving traffic and sales.

Brands will also compete directly in side-by-side AI comparisons, making clear differentiation (price, quality, reviews) huge for winning purchases.

Shoppers can buy without visiting your website, so brands must provide conversion-ready info for AI-first shopping environments.

The signal?

For consumers, shopping will become even more frictionless.

For brands, data quality becomes as important as keywords and backlinks.

If you touch e-comm: make sure your product feeds are clean, structured, and detailed.
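For anyone on your team who manages the feed, here is a minimal sketch of what a "clean feed" check could look like. The field names, the 30-character description minimum, and the `find_feed_issues` helper are all illustrative assumptions, not Google's actual feed requirements. Check your platform's own spec for the real rules.

```python
# Hypothetical required fields; a real feed spec defines its own list.
REQUIRED_FIELDS = ("title", "description", "price", "availability")
# Assumed threshold for a "detailed" description, chosen only for illustration.
MIN_DESCRIPTION_CHARS = 30


def find_feed_issues(product: dict) -> list:
    """Return a list of plain-English problems found in one product record."""
    issues = []

    # Flag any required field that is absent or blank.
    for field in REQUIRED_FIELDS:
        value = product.get(field)
        if value is None or str(value).strip() == "":
            issues.append(f"missing {field}")

    # A description that exists but is very short is probably not detailed enough.
    description = str(product.get("description") or "").strip()
    if 0 < len(description) < MIN_DESCRIPTION_CHARS:
        issues.append("description too short")

    # A price that is present must parse as a positive number.
    price = product.get("price")
    if price is not None and str(price).strip() != "":
        try:
            if float(price) <= 0:
                issues.append("price must be positive")
        except (TypeError, ValueError):
            issues.append("price is not a number")

    return issues


if __name__ == "__main__":
    sample = {"title": "Mug", "description": "A mug.", "price": "free"}
    for problem in find_feed_issues(sample):
        print(problem)
```

Running a check like this across every product before the feed is published gives you a simple to-do list of records to fix, which is usually a faster win than rewriting page copy.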

That's it for this week. If you're getting ahead of holiday shopping, this is a great opportunity to play with Google's new shopping features.

I'm excited to see what deals I can find on Black Friday.

-Riley