5 Things You Should Know in AI This Week — December 19, 2025

Simplifying the noise. Here are five signals that matter for non-technical workers.

What Happened This Week

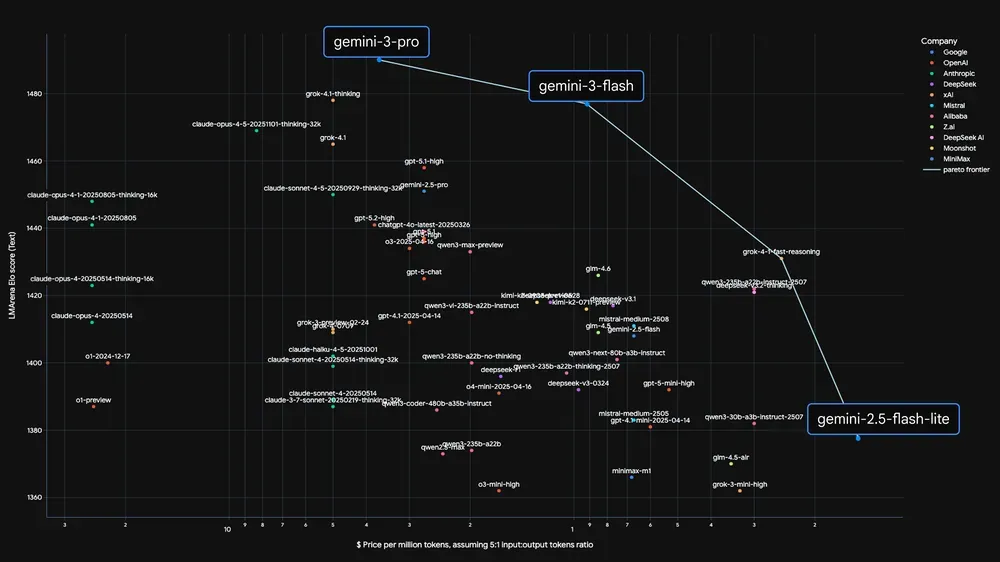

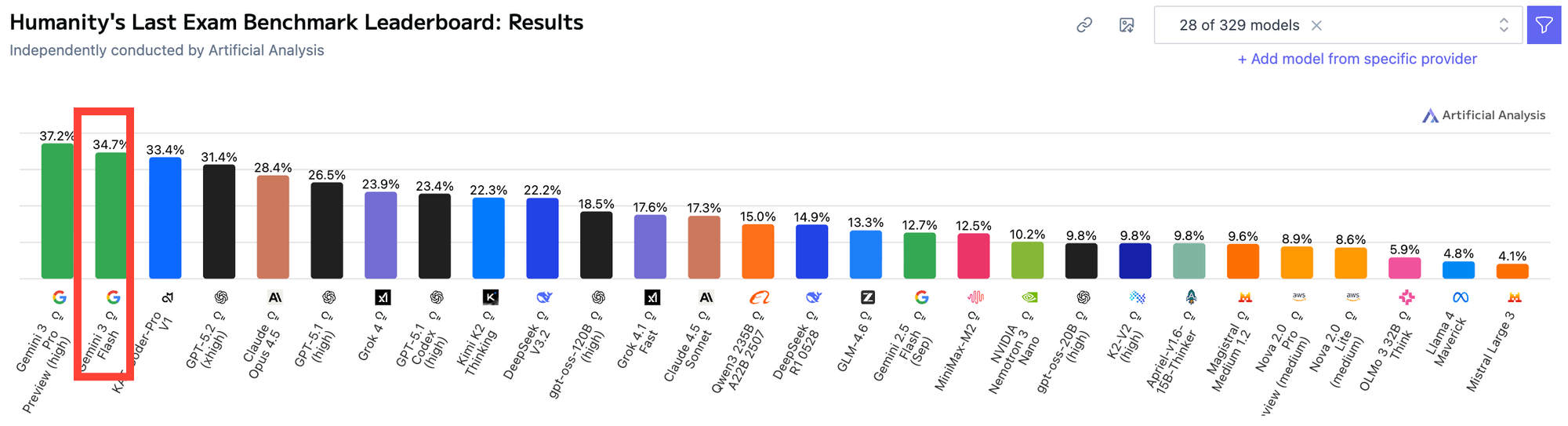

1. Google Rolls Out Gemini 3 Flash as New Default Model

Google launched Gemini 3 Flash, a faster and cheaper AI model that's now the default in the Gemini app and Google's AI Search Mode.

This is a big upgrade from Gemini 2.5 Flash.

Flash performs close to Gemini 3 Pro on benchmarks, with gains in reasoning, coding, and general knowledge.

The numbers:

- 34.7% on Humanity's Last Exam (beating the 31.4% scored by OpenAI's GPT-5.2 High)

- ¼ the price of Gemini 3 Pro

- 3x the speed of Gemini 3 Pro

The signal?

Google is keeping OpenAI on its toes.

Week after week, Google's been rapid-firing updates and advances.

OpenAI still holds the consumer market share lead, and switching is hard.

But Google has distribution advantages that OpenAI doesn't.

Its moves over the past few months strike a good balance, going after OpenAI in both the consumer and enterprise markets.

Tough to do.

The speed and capability combo is what to focus on with this release.

Matching the performance of the model OpenAI released last week, at faster speeds and lower cost, is a big win for Google.

For Gemini users, your default experience just got a big upgrade.

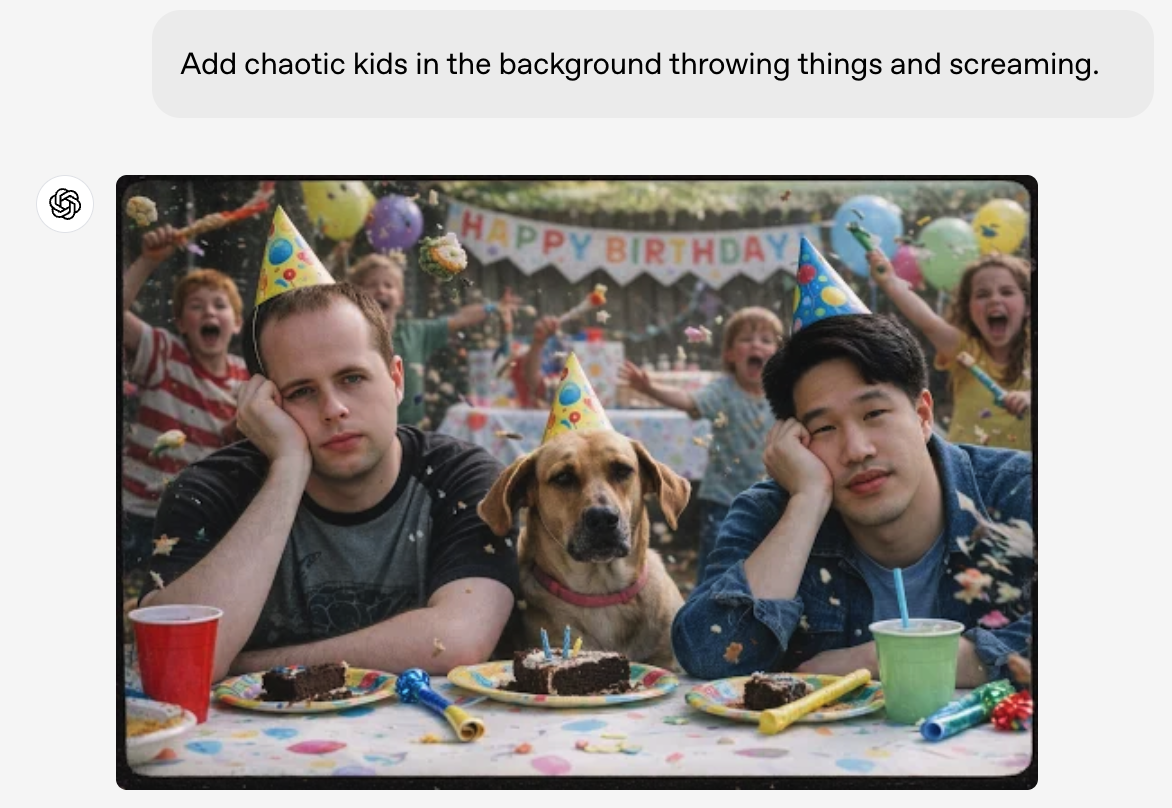

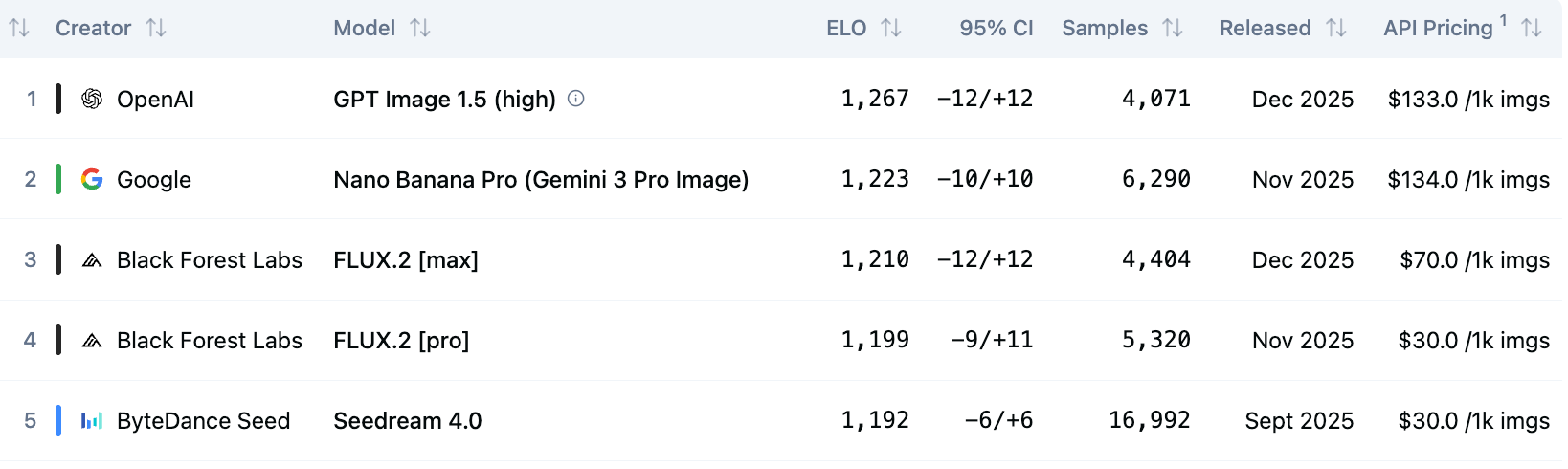

2. OpenAI Releases GPT-Image-1.5 Model

OpenAI released GPT-Image-1.5, its newest image generation model, available in ChatGPT and through the API.

The model brings upgrades in generation speed and editing abilities.

This is OpenAI's counter to Google's November Nano-Banana-Pro release.

Key improvements:

- 4x faster than before

- Stronger instruction following

- Better editing (adding, subtracting, combining, transposing)

- Detail preservation (faces, lighting, composition across edits)

- Improved text rendering (varied text sizes, infographic building)

GPT-Image-1.5 now ranks first on Artificial Analysis's text-to-image and editing leaderboards.

The signal?

Another step toward using AI-generated content in higher-stakes work.

For individuals creating images, this is a broadly useful upgrade.

The speed improvement alone is a huge win.

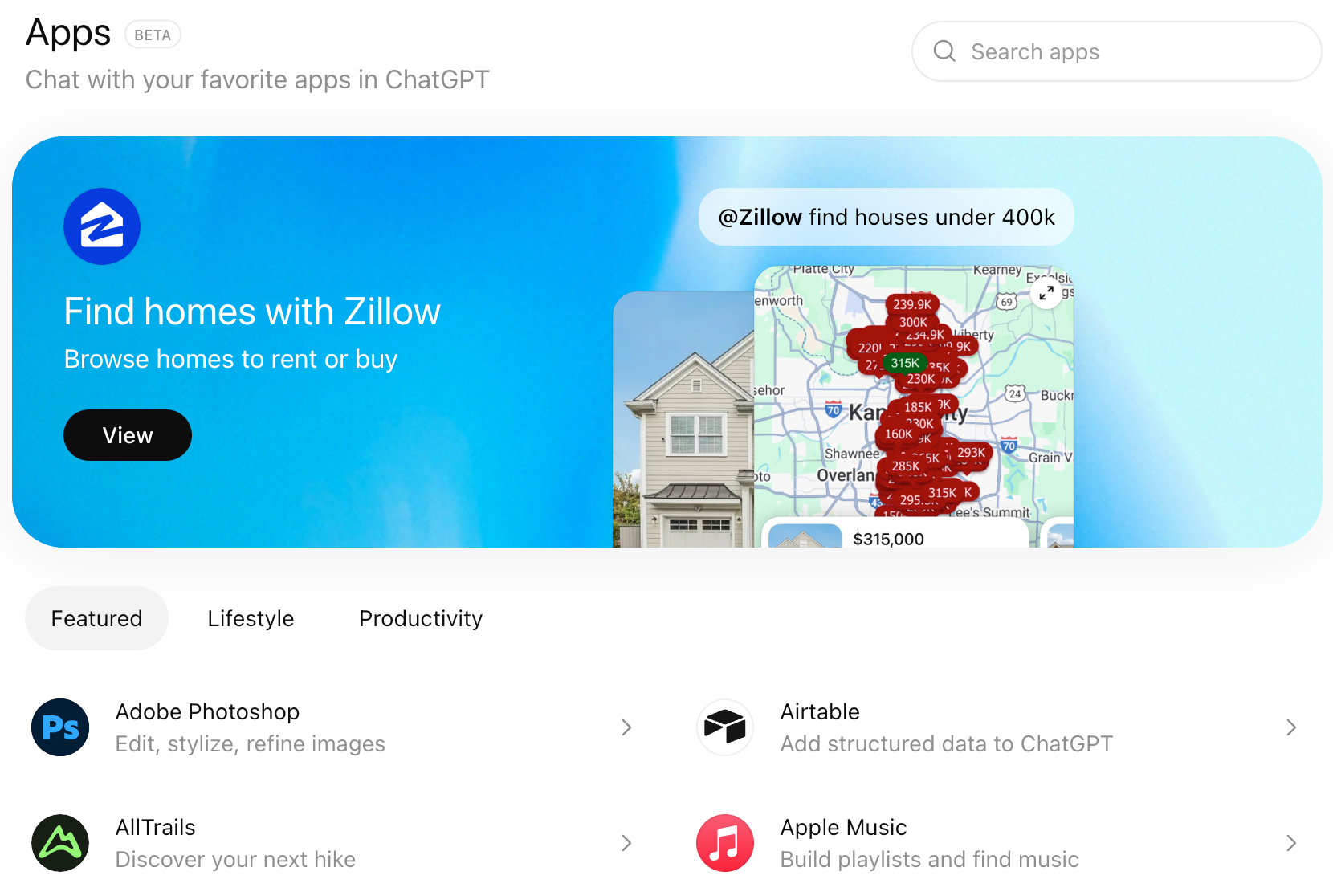

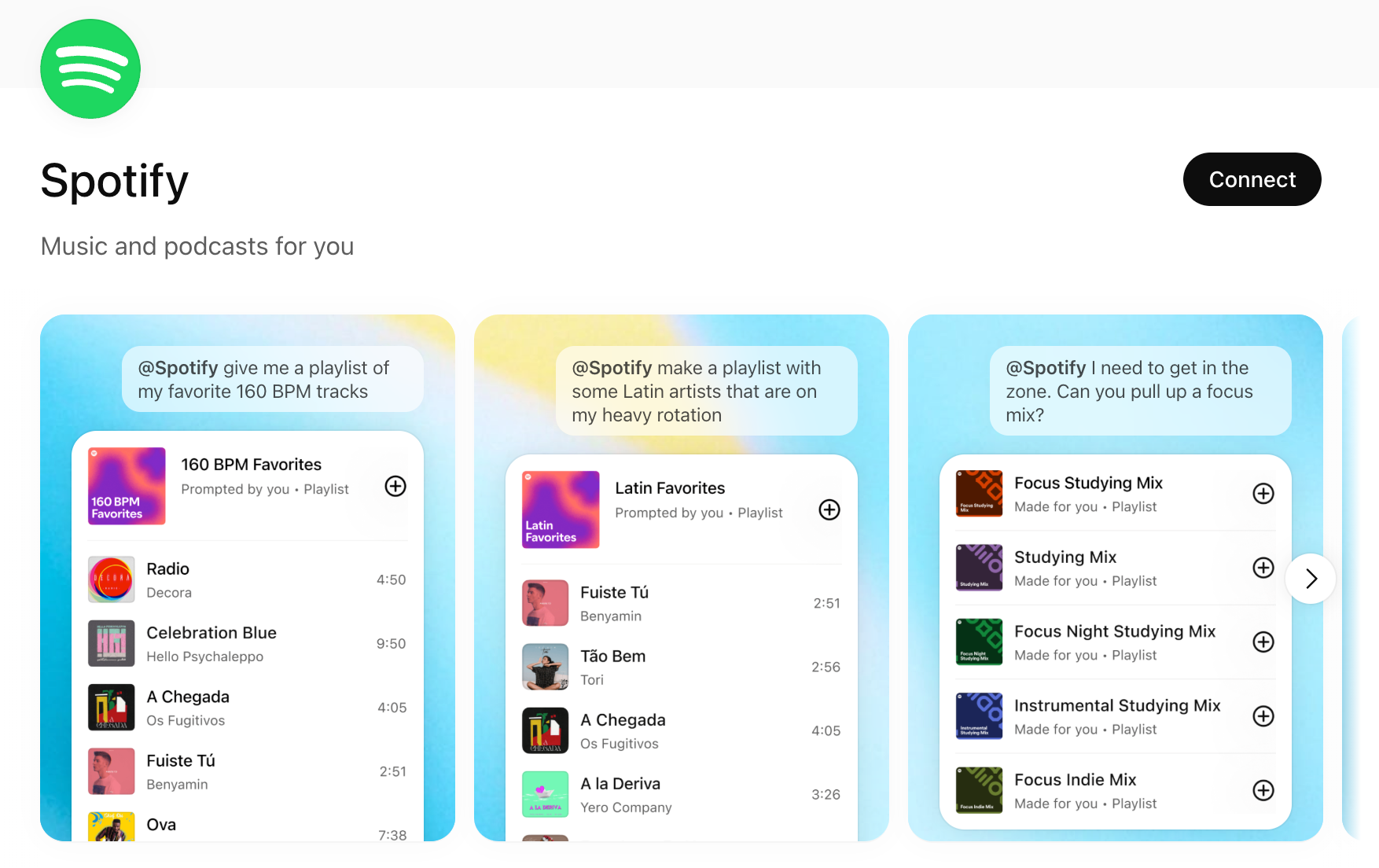

3. ChatGPT's App Store Is Finally Here

OpenAI just launched their app directory inside ChatGPT.

It's live now on iOS, Android, and web.

This is a moment OpenAI's been building toward since announcing their Apps SDK in October.

It will allow people to use third-party apps directly inside ChatGPT conversations.

Apps like Booking.com, Spotify, Dropbox, Apple Music, DoorDash, Photoshop, and Canva are available now.

To use an app, click it, hit "Connect," authorize access, then start a chat.

Once connected, mention any app with "@" to bring it into a conversation.

OpenAI also opened app submissions to third-party developers.

App submissions will go through review and must follow OpenAI's guidelines (privacy policies, no ads or tracking, appropriate for teens, etc.), similar to other app stores.

For now, developers can only monetize by linking out to their native app or website.

OpenAI says internal monetization options are coming later.

The signal?

This moment isn't grabbing the headlines, but it's a big step forward.

ChatGPT is moving from a Q&A tool to something closer to an operating system.

Instead of switching between your apps, you'll increasingly use them within your AI interface.

Beyond efficiency, it'll make the whole experience visually better.

A hint of where I think AI is headed down the road.

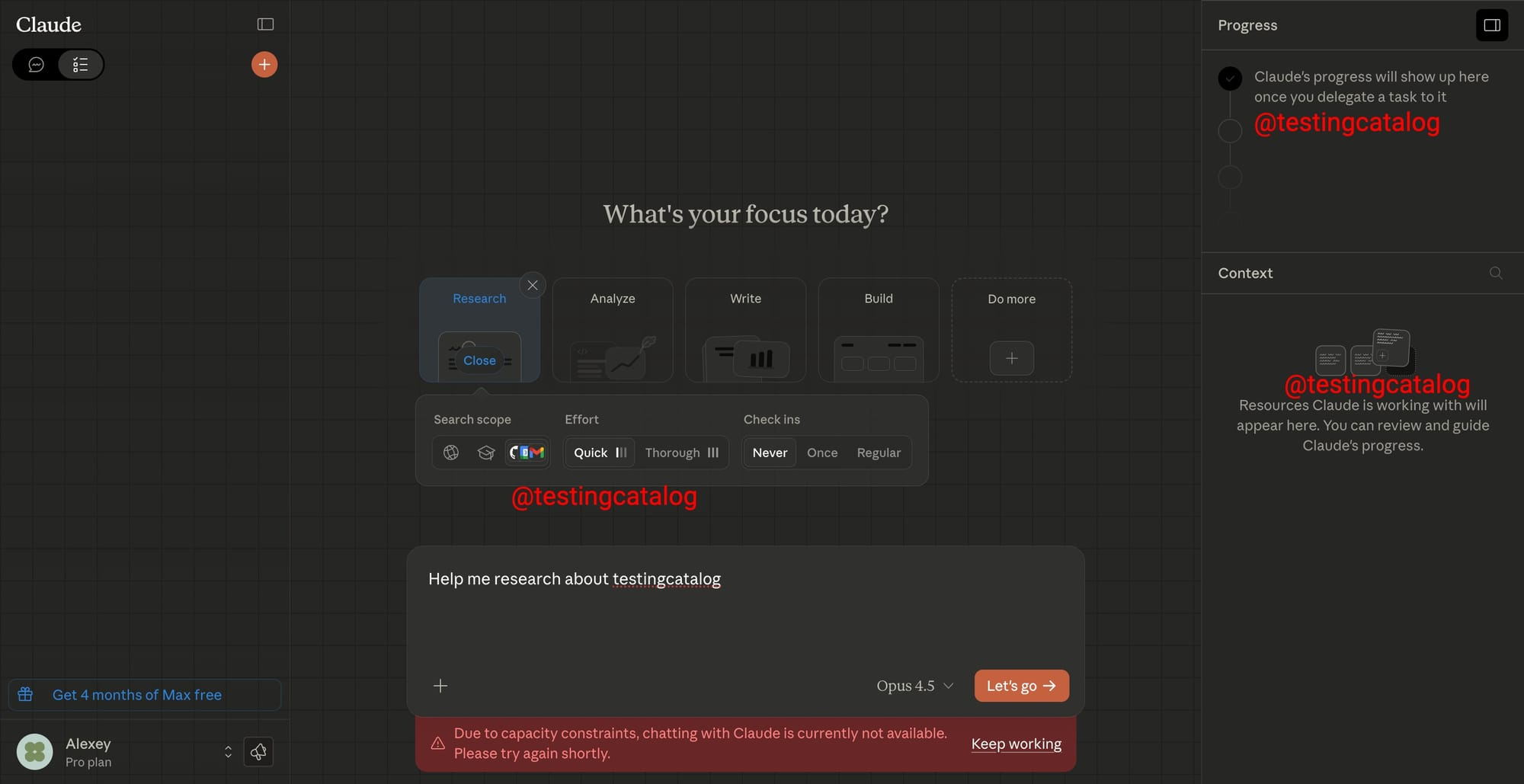

4. Anthropic Tests Agentic Tasks Mode in Claude

Anthropic is testing a new Agentic Tasks Mode for Claude.

The update adds a toggle between normal chat and agent mode within their chat interface.

It offers five specialized work paths in Agent Mode: Research, Analyze, Write, Build, and Do More.

Users get to control sources, effort level, interaction frequency, and output formats.

Also, a progress tracker shows how Claude breaks down each task.

The signal?

Anthropic is not new to tools like this.

Technical users rave about the structured planning capabilities they've built into Claude Code, which let AI plan and execute tasks without being micromanaged.

This feature would bring similar abilities into the chat interface, allowing non-technical workers to benefit too.

This is similar to their Skills product rollout, which was another evolution of bringing consistent execution to non-technical users.

Creating features that make AI more functional and trustworthy is a smart enterprise play.

Not the splashiest stuff, but very necessary.

We'll see if and when it rolls out to more people.

5. Numbers Worth Knowing This Week

- Enterprise gen AI spend was $37B in 2025, up 3.2x from 2024.

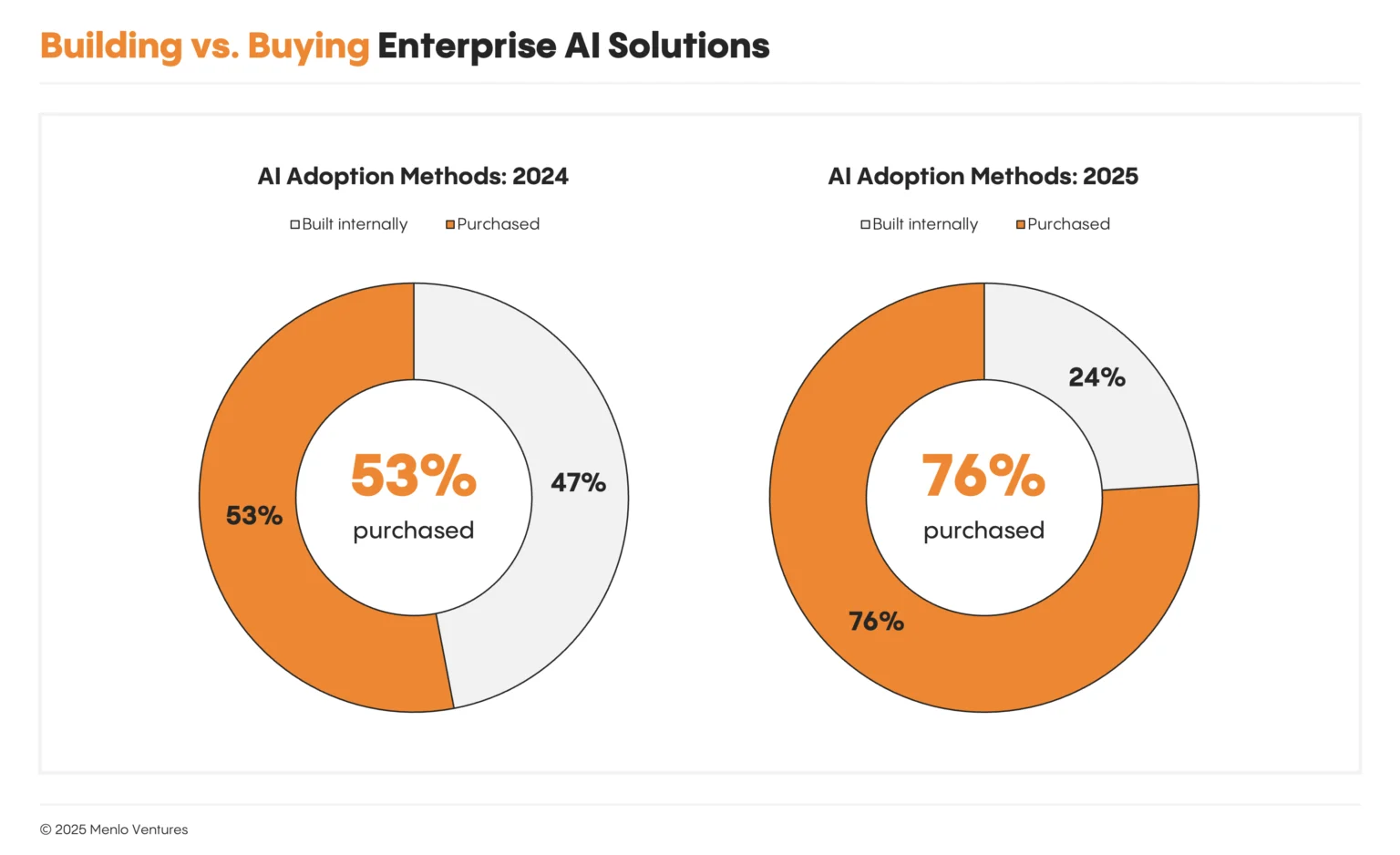

- Enterprises buy 76% of AI solutions vs building 24% (a shift from last year).

- Nearly half of AI deals move to production, 2x the rate of traditional SaaS products.

- Anthropic leads enterprise AI with 40% market share, ahead of OpenAI's 27% and Google's 21%, and holds 54% in coding.

- Just 16% of deployments are true AI agents.

- Source: Menlo Ventures

- Google and MIT found that adding more agents isn't always better.

- In multi-agent systems, once a single agent hits 45% accuracy, adding more agents can reduce performance as coordination costs outweigh the benefits.

- Source: Google and MIT

- OpenAI and Amazon are in talks over a potential $10B+ investment that would value OpenAI above $500B.

- The deal would help OpenAI cover massive infrastructure costs, including a $38B commitment to AWS servers over seven years.

- Talks also cover OpenAI using Amazon's Trainium chips and potential commerce integrations.

- Source: CNBC

This newsletter is becoming the Google and OpenAI roundup.

Shows just how dominant and competitive these two have become late this year.

Looking forward to the other players getting back into the arena next year.

Have a great weekend.

-Riley